AI leaderboards are no longer useful. It's time to switch to Pareto curves

By Sayash Kapoor, Benedikt Stroebl, Arvind Narayanan

Which is the most accurate AI system for generating code? Surprisingly, there isn’t currently a good way to answer a question like this.

Based on HumanEval, a widely used benchmark for code generation, the most accurate publicly available system is LDB (short for LLM debugger).1 But there’s a catch. The most accurate generative AI systems, including LDB, tend to be agents,2 which repeatedly invoke language models like GPT-4. That means they can be orders of magnitude more costly to run than the models themselves (which are already pretty costly). If we eke out a 2% accuracy improvement for 100x the cost, is that really better?

In this post, we argue that:

- AI agent accuracy measurements that don’t control for cost aren’t useful.
- Pareto curves can help visualize the accuracy-cost tradeoff.
- Current state-of-the-art agent architectures are complex and costly but no more accurate than extremely simple baseline agents that cost 50x less in some cases.
- Proxies for cost such as parameter count are misleading if the goal is to identify the best system for a given task. We should directly measure dollar costs instead.
- Published agent evaluations are difficult to reproduce because of a lack of standardization and questionable, undocumented evaluation methods in some cases.

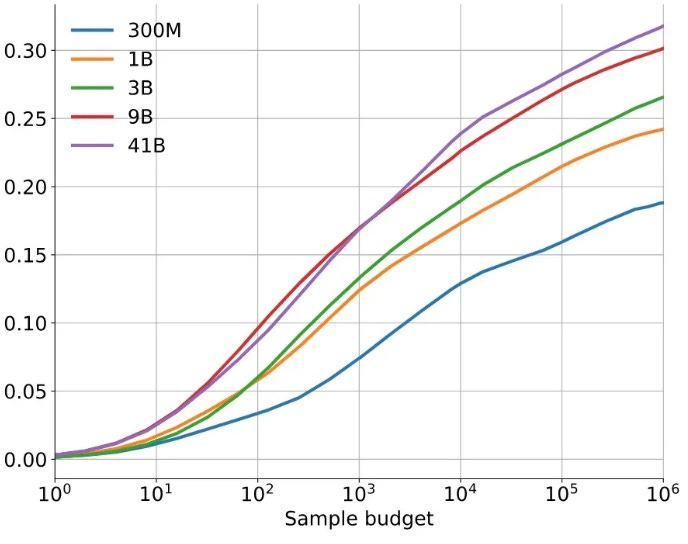

LLMs are stochastic. Simply calling a model many times and outputting the most common answer can increase accuracy.

On some tasks, there is seemingly no limit to the amount of inference compute that can improve accuracy.3 Google DeepMind's AlphaCode, which improved accuracy on automated coding evaluations, showed that this trend holds even when calling LLMs millions of times.
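As a rough illustration of the "call the model many times and output the most common answer" idea, here is a minimal sketch. The `sample` callable is a hypothetical stand-in for a stochastic model call, and `fake_model` exists only to make the example runnable; neither comes from our evaluation code.

```python
from collections import Counter
from itertools import cycle
from typing import Callable, List


def majority_vote(sample: Callable[[], str], n_samples: int = 5) -> str:
    """Call a stochastic model n_samples times and return the most common answer."""
    answers: List[str] = [sample() for _ in range(n_samples)]
    # most_common(1) returns [(answer, count)]; ties go to the answer seen first.
    return Counter(answers).most_common(1)[0][0]


# Hypothetical stand-in for a model whose answers vary from call to call.
_fake_outputs = cycle(["42", "41", "42", "42", "40"])


def fake_model() -> str:
    return next(_fake_outputs)


result = majority_vote(fake_model, n_samples=5)
print(result)
```

Note that every extra sample is another paid model call, so the cost of this strategy grows linearly with `n_samples`.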

A useful evaluation of agents must therefore ask: What did it cost? Without cost-controlled comparisons, researchers are encouraged to develop extremely costly agents just to claim they topped the leaderboard.

In fact, when we evaluate agents that have been proposed in the last year for solving coding tasks, we find that visualizing the tradeoff between cost and accuracy yields surprising insights.

We re-evaluated the accuracy of three agents that have been claimed to occupy top spots on the HumanEval leaderboard: LDB, LATS, and Reflexion.4 We also evaluated the cost and time requirements of running these agents.

These agents rely on running the code generated by the model, and if it fails the test cases provided with the problem description, they try to debug the code, look at alternative paths in the code generation process, or "reflect" on why the model's outputs were incorrect before generating another solution.

In addition, we calculated the accuracy, cost, and running time of a few simple baselines:

- GPT-3.5 and GPT-4 models (zero shot; no agent architecture5)
- Retry: We repeatedly invoke a model with the temperature set to zero, up to five times, if it fails the test cases provided with the problem description.6 Retrying makes sense because LLMs aren’t deterministic even at temperature zero.
- Warming: This is the same as the retry strategy, but we gradually increase the temperature of the underlying model with each run, from 0 to 0.5. This increases the stochasticity of the model and, we hope, increases the likelihood that at least one of the retries will succeed.
- Escalation: We start with a cheap model (Llama-3 8B) and escalate to more expensive models (GPT-3.5, Llama-3 70B, GPT-4) if we encounter a test case failure.7
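The three baselines above can be sketched in a few lines. This is an illustration under stated assumptions, not our evaluation code: `generate` and `passes_tests` are hypothetical callables, the linear temperature schedule is one possible reading of "gradually", and returning the last attempt when nothing passes is also an assumption.

```python
from typing import Callable, Sequence

# Hypothetical interfaces (assumptions, not from the post):
#   generate(model, prompt, temperature) -> candidate code
#   passes_tests(code) -> True if the example tests in the problem pass
Generate = Callable[[str, str, float], str]
PassesTests = Callable[[str], bool]


def warming(generate: Generate, passes_tests: PassesTests, prompt: str,
            model: str, max_attempts: int = 5,
            max_temperature: float = 0.5) -> str:
    """Retry up to max_attempts times, raising temperature from 0 to max_temperature."""
    code = ""
    for attempt in range(max_attempts):
        # Linear schedule (assumed): 0, 0.125, 0.25, 0.375, 0.5 with the defaults.
        temperature = max_temperature * attempt / max(max_attempts - 1, 1)
        code = generate(model, prompt, temperature)
        if passes_tests(code):
            break
    return code  # assumption: submit the last attempt if none passed


def retry(generate: Generate, passes_tests: PassesTests, prompt: str,
          model: str, max_attempts: int = 5) -> str:
    """Same loop as warming, but the temperature stays at zero."""
    return warming(generate, passes_tests, prompt, model,
                   max_attempts, max_temperature=0.0)


def escalation(generate: Generate, passes_tests: PassesTests, prompt: str,
               models: Sequence[str]) -> str:
    """Try models from cheapest to most expensive, stopping at the first pass."""
    code = ""
    for model in models:
        code = generate(model, prompt, 0.0)  # temperature 0 is an assumption
        if passes_tests(code):
            break
    return code


# Toy stand-ins so the sketch runs without any API access.
def fake_generate(model: str, prompt: str, temperature: float) -> str:
    return "good" if model == "big-model" or temperature >= 0.25 else "bad"


def fake_passes(code: str) -> bool:
    return code == "good"


print(retry(fake_generate, fake_passes, "p", "small-model"))
print(warming(fake_generate, fake_passes, "p", "small-model"))
print(escalation(fake_generate, fake_passes, "p", ["small-model", "big-model"]))
```

None of these strategies needs debugging, planning, or reflection logic; each only needs a way to run the example tests.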

Surprisingly, we are not aware of any papers that compare their proposed agent architectures with any of the latter three simple baselines.

Our most striking result is that agent architectures for HumanEval do not outperform our simpler baselines despite costing more. In fact, agents differ drastically in terms of cost: for substantially similar accuracy, the cost can differ by almost two orders of magnitude!8 Yet, the cost of running these agents isn't a top-line metric reported in any of these papers.9

There is no significant accuracy difference between the warming strategy and the best-performing agent architecture. Yet, Reflexion and LDB cost over 50% more than the warming strategy,10 and LATS over 50 times more (all these costs are entirely or predominantly from calls to GPT-4, so these ratios will be stable even if model costs change). Meanwhile, the escalation strategy strictly improves accuracy while costing less than half of LDB (GPT-3.5) at current inference prices.11
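To make the cost-accuracy comparison concrete, here is a minimal sketch of how a Pareto frontier could be computed from (cost, accuracy) pairs. The system names and numbers are invented placeholders, not our measurements; a system is on the frontier if no other system is both at least as cheap and at least as accurate.

```python
from typing import List, NamedTuple


class Result(NamedTuple):
    name: str
    cost_usd: float
    accuracy: float


def pareto_frontier(results: List[Result]) -> List[Result]:
    """Return the results that no cheaper-or-equal result matches or beats on accuracy."""
    # Sort by cost ascending; at equal cost, put the more accurate result first.
    ordered = sorted(results, key=lambda r: (r.cost_usd, -r.accuracy))
    frontier: List[Result] = []
    best_accuracy = float("-inf")
    for r in ordered:
        # Keep a result only if it is strictly more accurate than everything cheaper.
        if r.accuracy > best_accuracy:
            frontier.append(r)
            best_accuracy = r.accuracy
    return frontier


# Placeholder values for illustration only (not results from this post).
results = [
    Result("cheap_baseline", 1.0, 0.80),
    Result("mid_baseline", 2.0, 0.90),
    Result("costly_agent", 50.0, 0.85),
    Result("complex_agent", 100.0, 0.90),
]

frontier_names = [r.name for r in pareto_frontier(results)]
print(frontier_names)
```

In this toy data, both agents fall off the frontier because a cheaper system already matches or exceeds their accuracy.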

Our results point to another underlying problem: papers making claims about the usefulness of agents have so far failed to test if simple agent baselines can lead to similar accuracy. This has led to widespread beliefs among AI researchers that complex ideas like planning, reflection, and debugging are responsible for accuracy gains. In fact, back in 2018, Lipton and Steinhardt noted a trend in the AI literature of failing to identify the sources of empirical gains.

Based on our findings, the question of whether debugging, reflection, and other such “System 2” approaches are useful for code generation remains open.12 It is possible that they will be useful on harder programming tasks than those represented in HumanEval. For now, the over-optimism about System 2 approaches is exacerbated by a lack of reproducibility and standardization that we report below.13

At first glance, reporting dollar costs is jarring. It breaks many properties of benchmarking that we take for granted: that measurements don’t change over time (whereas costs tend to come down) and that different models compete on a level playing field (whereas some developers may benefit from economies of scale, leading to lower inference costs). Because of this, researchers usually pick a different axis for the Pareto curve, such as parameter count.

The downsides of reporting costs are real, but we describe below how they can be mitigated. More importantly, we think using attributes like parameter count as a proxy for cost is a mistake and doesn’t solve the problem it’s intended to solve. To understand why, we need to introduce a conceptual distinction.

AI evaluations serve at least two distinct purposes. Model developers and AI researchers use them to identify which changes to the training data and architecture improve accuracy. We call this model evaluation. And downstream developers, such as programmers who use AI to build consumer-facing products, use evaluations to decide which AI systems to use in their products. We call this downstream evaluation.

The difference between model evaluation and downstream evaluation is underappreciated. This has led to much confusion about how to factor in the cost of running AI.

Model evaluation is a scientific question of interest to researchers. So it makes sense to stay away from dollar costs for the aforementioned reasons. Instead, controlling for compute is a reasonable approach: if we normalize the amount of compute used to train a model, we can then understand if factors like architectural changes or changes in the data composition are responsible for improvements, as opposed to more compute. Notably, Nathan Lambert argues that many of the accuracy gains in the last year (such as Meta's Llama 2) are simply consequences of using more compute.

On the other hand, downstream evaluation is an engineering question that helps inform a procurement decision. Here, cost is the actual construct of interest. The downsides of cost measurement aren’t downsides at all; they are exactly what’s needed. Inference costs do come down over time, and that greatly matters to downstream developers. It is unnecessary and counterproductive for the evaluation to stay frozen in time.

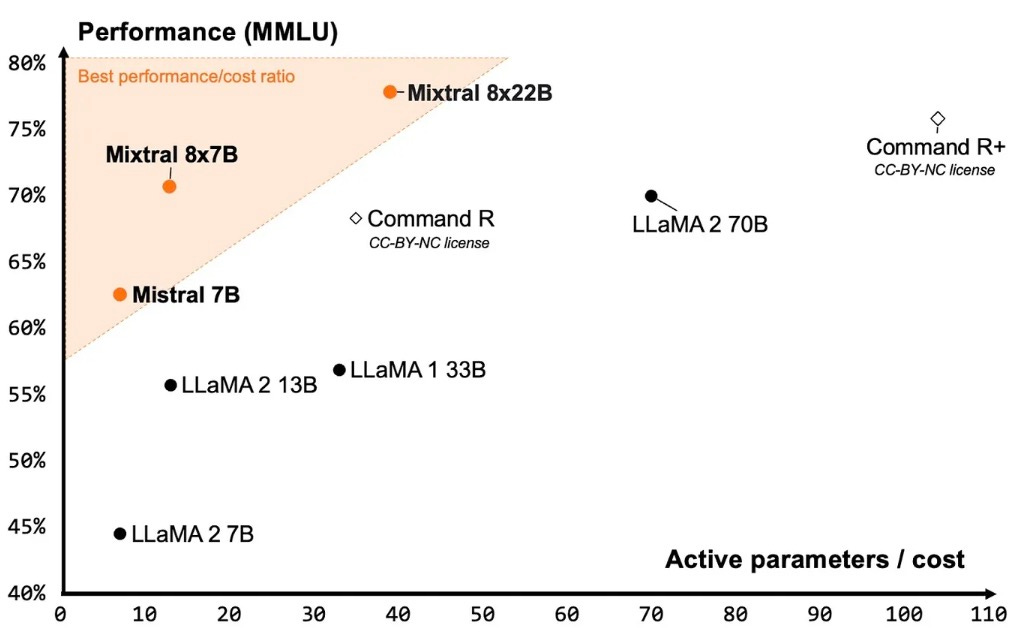

In this context, proxies for cost (such as the number of active parameters or amount of compute used) are misleading. For example, Mistral released the figure below alongside their latest model, Mixtral 8x22B, to explain why developers should choose it over competitors.

In this figure, the number of active parameters is a poor proxy for cost. On Anyscale, Mixtral 8x7B costs twice as much as Llama 2 13B, yet Mistral's figure shows it costs about the same, because they only consider the number of active parameters. Of course, downstream developers don't care about the number of active parameters when they're using an API. They simply care about the dollar cost relative to accuracy. Mistral chose “active parameters” as a proxy, presumably because it makes their models look better than dense models such as Meta’s Llama and Cohere’s Command R+. If we start using proxies for cost, every model developer can pick a proxy that makes their model look good.

Some hurdles to cost evaluation remain. Different providers can charge different amounts for the same model, the cost of an API call might change overnight, and cost might vary based on model developer decisions, such as whether bulk API calls are charged differently. These downsides can be partly addressed by making the evaluation results customizable using mechanisms to adjust the cost of running models, i.e., providing users the option to adjust the cost of input and output tokens for their provider of choice to recalculate the tradeoff between cost and accuracy. In turn, downstream evaluations of agents should include input/output token counts in addition to dollar costs, so that anyone looking at the evaluation in the future can instantly recalculate the cost using current prices.
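As a sketch of why reporting token counts helps, the snippet below recomputes dollar cost from stored token counts under two different price lists. The prices and token counts are hypothetical placeholders, and the per-million-token pricing convention is an assumption about how a provider bills.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TokenUsage:
    input_tokens: int
    output_tokens: int


def dollar_cost(usage: TokenUsage,
                input_price_per_million: float,
                output_price_per_million: float) -> float:
    """Dollar cost of a run, given prices in USD per one million tokens."""
    return (usage.input_tokens * input_price_per_million
            + usage.output_tokens * output_price_per_million) / 1_000_000


# Hypothetical token counts recorded for one agent over a full benchmark run.
usage = TokenUsage(input_tokens=2_000_000, output_tokens=500_000)

# Hypothetical price lists: the same run priced at two points in time.
cost_then = dollar_cost(usage, input_price_per_million=10.0, output_price_per_million=30.0)
cost_now = dollar_cost(usage, input_price_per_million=5.0, output_price_per_million=15.0)
print(cost_then, cost_now)
```

Because the token counts are stored, the run never has to be repeated when prices change; only the multiplication does.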

But ultimately, despite the hurdles, good measurement requires modeling the underlying construct of interest. For downstream evaluations, that underlying construct is cost. All other proxies are lacking.

In the course of our evaluation, we found many shortcomings in the reproducibility and standardization of agent evaluations.

We were unable to reproduce the results of the LATS and LDB agents on HumanEval. In particular, across all five runs for LDB (Reflexion, GPT-3.5), the maximum accuracy was 91.5%, much lower than the 95.1% reported in the paper.14 The maximum accuracy of LATS across all five runs was similarly lower, at 91.5% instead of 94.4%.

Similarly, the accuracy for the baseline GPT-4 model reported in the LDB paper is drastically lower than our reproduction of the paper's code (75.0% vs. a mean of 89.6% across five runs). In fact, according to the paper, the GPT-3.5 and GPT-4 models perform very similarly (73.9% vs. 75.0%).15 Weak baselines could give a false sense of the amount of improvement attributable to the agent architecture.

The LATS agent was evaluated on only a subset of the test cases provided in the HumanEval benchmark. This exaggerated its accuracy numbers: the code for a particular HumanEval problem could be incorrect yet still be marked as correct, as long as it passed the portion of the test cases that was actually run. In our analysis, this was responsible for a 3% difference in accuracy (mean across five runs), which explains a substantial part of the difference between the accuracy we found and the one reported in the paper. In addition, many details about the implementation, such as hyperparameter values, were not reported in the paper or GitHub repository (see appendix for details).
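A toy example shows how checking only a subset of test cases can mark incorrect code as correct. The function and tests below are invented for illustration and are not taken from HumanEval or the LATS repository.

```python
from typing import Callable, List, Tuple

TestCase = Tuple[int, int]  # (input, expected output)


def passes(fn: Callable[[int], int], tests: List[TestCase]) -> bool:
    """True only if fn returns the expected output on every test case given."""
    return all(fn(x) == expected for x, expected in tests)


def buggy_abs(x: int) -> int:
    # Incorrect "absolute value": wrong for negative inputs.
    return x


full_tests: List[TestCase] = [(3, 3), (0, 0), (-2, 2)]
subset_tests = full_tests[:2]  # evaluating on only part of the test suite

marked_correct_on_subset = passes(buggy_abs, subset_tests)
marked_correct_on_full = passes(buggy_abs, full_tests)
print(marked_correct_on_subset, marked_correct_on_full)
```

The buggy function is counted as a success under the partial suite and as a failure under the full one, which is the kind of gap that inflates reported accuracy.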

To the best of our knowledge, this post is the first time the four agents with the highest accuracy—Retry, Warming, LDB (GPT-4), and LDB (GPT-4 + Reflexion)—have been tested on HumanEval.16

Reflexion, LDB, and LATS all use different subsets of HumanEval. Three (out of 164) coding problems in the original version of HumanEval lack example tests. Since these agents require example tests to debug or rerun their solutions, Reflexion removes the three problems that don't have example tests. LATS removes these three problems, plus another problem, for unreported reasons.17 LDB adds example tests for the three problems that are missing in the original benchmark. None of the three papers reports this. The paper introducing LATS claims (incorrectly): "We use all 164 problems for our experiments."18 In our analysis, we conducted all evaluations on the version of the benchmark provided by LDB, since it contains example tests for all problems.

The LDB paper claims to use GPT-3.5 for code generation using Reflexion: "For Reflexion, we select the version based on GPT-3.5 and utilize the corresponding generated programs published in the official Github repository." However, the generated program they used from the Reflexion repository relies on GPT-4 for code generation, not GPT-3.5.19

These shortcomings in the empirical results have also led to errors of interpretation in broader discussions around the accuracy of AI agents. For example, a recent post by Andrew Ng claimed that agents that use GPT-3.5 can outperform GPT-4. In particular, he claimed:

[For HumanEval,] GPT-3.5 (zero shot) was 48.1% correct. GPT-4 (zero shot) does better at 67.0%. However, the improvement from GPT-3.5 to GPT-4 is dwarfed by incorporating an iterative agent workflow. Indeed, wrapped in an agent loop, GPT-3.5 achieves up to 95.1%.

While this claim received a lot of attention, it is incorrect. The claim ("GPT-3.5 wrapped in an agent workflow achieves 95.1% accuracy") seems to be about the LDB agent. The Papers With Code leaderboard for HumanEval makes the same claim. However, as we discussed above, for LDB, GPT-3.5 is only used to find bugs. The code is generated using GPT-4 (or the Reflexion agent that uses GPT-4), not GPT-3.5. Unfortunately, the error in the paper has led to much overoptimism about agents in the broader AI community.

Ng's post also makes the familiar error of repeating results from papers without verifying them or accounting for changes in prompts and model versions. For example, the zero-shot accuracy numbers of GPT-3.5 (48.1%) and GPT-4 (67.0%) seem to be copied from the GPT-4 technical report from March 2023. However, the models have been updated many times since release. Indeed, in our comparison, we find that the base models perform much better compared to the claimed figures in Ng's post when we use them with the prompts provided with the LDB paper (GPT-3.5: 73.9%, GPT-4: 89.6%). As a result, the post drastically overestimates the improvement attributable to agent architectures.

Evaluation frameworks like Stanford's HELM and EleutherAI's LM Evaluation Harness attempt to fix similar shortcomings for model evaluations, by providing standardized evaluation results. We are working on solutions to make agent evaluations standardized and reproducible, especially from the perspective of downstream evaluation of agents.

Finally, downstream developers should keep in mind that HumanEval or any other standardized benchmark is nothing more than a rough proxy for the specific tasks that arise in a particular downstream application. To understand how agents will perform in practice, it is necessary to evaluate them on a custom dataset from the domain of interest — or even better, A/B test different agents in the production environment.

Zaharia et al. observe that state-of-the-art accuracy on AI benchmarks is often attained by composite systems. If the adoption of agents continues, visualizing cost and accuracy as a Pareto curve would become even more necessary.

Santhanam et al. point out the importance of evaluating cost alongside accuracy for information retrieval benchmarks.

Ormazabal et al. highlight the accuracy vs. cost per output token tradeoffs for various models (but not agents) on MMLU. While the cost of output tokens might not be a good indicator of the overall cost, given the varying input token costs as well as output lengths for different models, it is better than not reporting the tradeoffs at all.

The Berkeley Function Calling leaderboard includes various metrics for language model evaluations of function calling, including cost and latency.

Xie et al. develop OSWorld, a benchmark for evaluating agents in computer environments. In their GitHub repository (though not in the paper), they give a rough cost estimate for running various multimodal agents on their benchmark.

Unsurprisingly, the main impetus for cost vs. accuracy tradeoffs has come from the downstream developers who use AI.

In a previous talk, we discussed three major pitfalls in LLM evaluation: prompt sensitivity, construct validity, and contamination. The current research is largely orthogonal: prompt sensitivity isn’t a concern for agent evaluation (as agents are allowed to define their own prompts); downstream developers can address contamination and construct validity by evaluating on custom datasets.

The code for reproducing our analysis is available here. The appendix includes more details about our setup and results.

We thank Rishi Bommasani, Rumman Chowdhury, Percy Liang, Shayne Longpre, Yifan Mai, Nitya Nadgir, Matt Salganik, Hailey Schoelkopf, Zachary Siegel, and Venia Veselovsky for discussions and inputs that informed our analysis. We acknowledge Cunxiang Wang and Ruoxi Ning for their prompt responses to our questions about the NovelQA benchmark.

We are grateful to the authors of the papers we engage with in this post for their quick responses and for sharing their code, which makes such reproduction analysis possible in the first place. In particular, we are grateful to Zilong Wang (LDB), Andy Zhou (LATS), and Karthik Narasimhan (Reflexion), who gave us feedback in response to an earlier draft of this blog post.